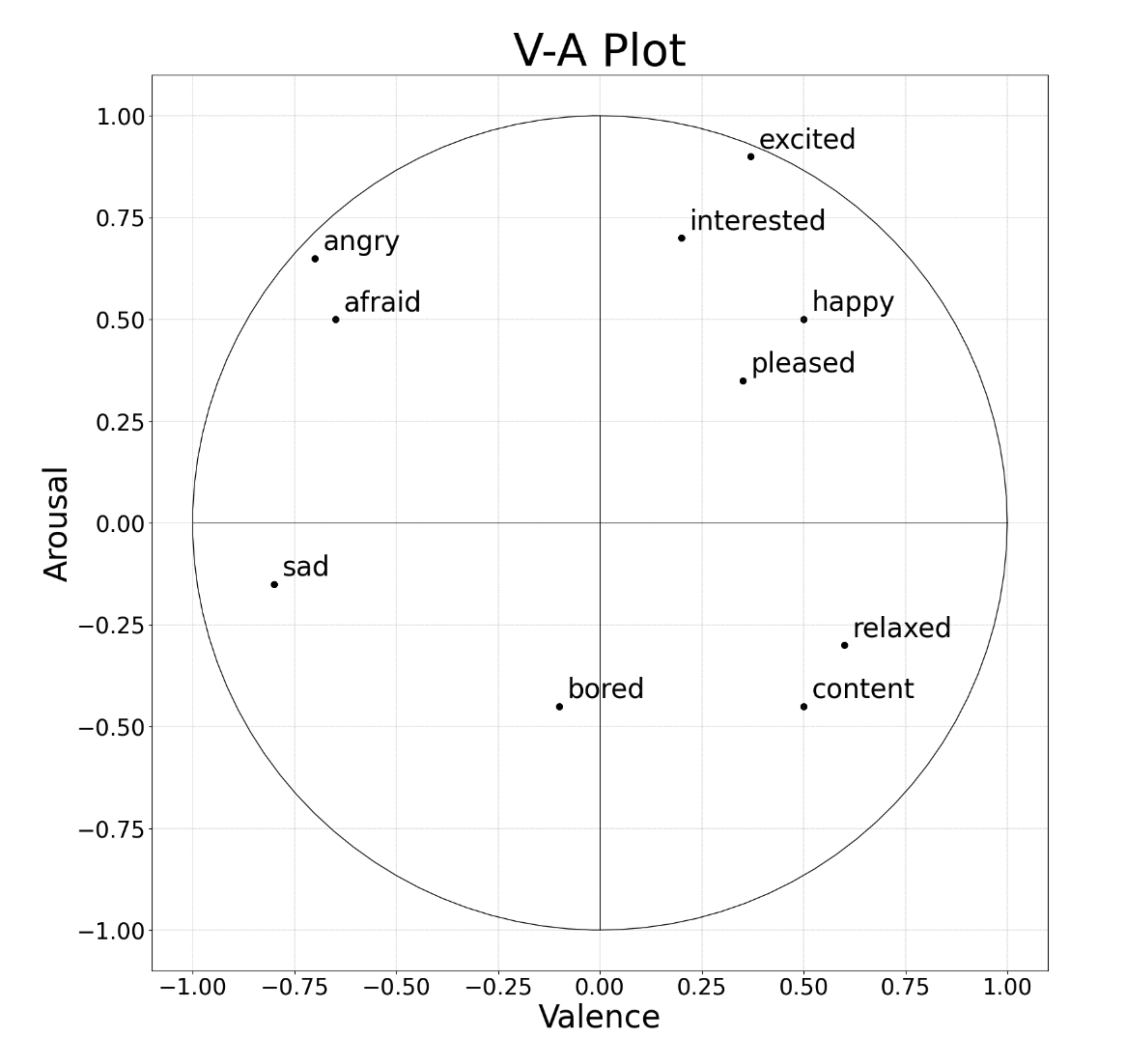

EmotionGUI is a user-intuitive tool for the visualisation and annotation of emotions in speech and video signals. The tool is currently intended for research purposes and may be useful for speech-based technologies in the future. It is based on the Valence-Arousal (VA) two-dimensional emotion model from psychology and enables multiple users to annotate emotions in a simple and efficient way.

Valence - How positive (Happy) or negative (Sad) an emotion is

Arousal - The intensity (Exciting ------- Calm) of an emotion

EmotionGUI now consists of three sections: Visualise, Annotation, and Live-Audio. This research tool aims to address the need for a user-intuitive tool that can facilitate annotation by multiple users and is open-sourced for future development. The web-based GUI is built using HTML, CSS, JavaScript, and PyScript (a Python add-on for HTML).

If you are testing this site please download this link before continuing with the web evaluation survey.

Here is what you can do with 'EmotionGUI'.

The annotation webpage offers three distinct approaches for annotating emotions in speech, each applying a different emotion model.

You can switch between these models using tabs. They are categorised as discrete/categorical, 1-D, and 2-D emotion models.

In the 1D model, emotions are associated with 3 key dimensions:

You have the flexibility to work with any of these emotion dimensions based on your preferences.

The 2D model employs a Valence-Arousal (V-A) plot to identify emotions. This model plots emotions on a graph with Valence on the x axis and Arousal on the y axis to provide a visual representation.
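As a rough illustration of how a point on such a plot can be read, the sketch below maps a (valence, arousal) pair to a quadrant. The function name `va_quadrant`, the [-1, 1] value range, and the neutral point at 0 are assumptions made for this example and are not taken from the EmotionGUI code.

```python
def va_quadrant(valence: float, arousal: float) -> str:
    """Describe the quadrant of a point on a Valence-Arousal plot.

    Assumption: both values lie in [-1, 1] and 0 is the neutral midpoint.
    Valence is the x axis, arousal is the y axis.
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must be within [-1, 1]")

    # Points exactly on an axis are grouped with the positive/high side.
    valence_label = "positive valence" if valence >= 0 else "negative valence"
    arousal_label = "high arousal" if arousal >= 0 else "low arousal"
    return f"{valence_label}, {arousal_label}"


if __name__ == "__main__":
    print(va_quadrant(0.8, 0.6))
    print(va_quadrant(-0.5, -0.7))
```

The quadrant labels are deliberately generic; which emotion names belong in each quadrant depends on the emotion model being used.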

The Visualise webpage lets users visualise speech emotions, encoded as CSV data or WAV data, on a Valence-Arousal plot.
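The exact CSV layout expected by the Visualise page is not described here. As a minimal sketch, assuming a hypothetical file with `time`, `valence`, and `arousal` columns, the data could be read into plottable points like this:

```python
import csv
import io


def load_va_points(csv_text: str) -> list[tuple[float, float, float]]:
    """Parse CSV text into (time, valence, arousal) tuples.

    Assumption: the header row names the columns "time", "valence",
    and "arousal". These names are hypothetical, not EmotionGUI's format.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    points = []
    for row in reader:
        points.append(
            (float(row["time"]), float(row["valence"]), float(row["arousal"]))
        )
    return points


if __name__ == "__main__":
    sample = "time,valence,arousal\n0.0,0.2,-0.1\n1.0,0.5,0.4\n"
    for time_s, valence, arousal in load_va_points(sample):
        print(time_s, valence, arousal)
```

Each tuple gives one point for the plot, with valence as the x coordinate and arousal as the y coordinate.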

The Live-Audio webpage allows the user to record live audio clips that can be used within the website itself or for other purposes.

The Speech Research Group at the University of Auckland consists of engineers, linguists, and educational researchers focussing on different aspects of speech research.

For more information visit the official website for UoA Speech Research Group.